Thursday, April 22, 2010

Just Enough or Pack Rat?

A full SDLC process attracts pack rats. They plan and plan, establish contingency plans and stack the process so they don’t make a mistake. Along the way they pay huge “carrying costs” of keeping inventories of plans, processes, documents, meetings and calendars. Their users are frustrated at how little gets delivered in so much time.

Agile methods are the “just enough” approach. We do as much as we need to deliver great, usable software that delights our users and no more. We’re constantly open to change – able to turn on a dime, because we don’t have a fifty-page plan to revise and four levels of approval to obtain. Our users are thrilled because we spend our time focused on their needs rather than on “process”. We deliver maximum bang for the buck where bang is defined as usable software solving real problems. We have way more fun!

Are you a “just enough” craftsman or a “pack rat”?

TFS In The Cloud

Interested in giving it a try? Ping us.

SQL Server 2008 @ Amazon EC2 “Event 17058 File not found”

(Cross-posted here from an 18 March 2010 post on my personal blog at markr.com.)

Ran into an interesting little glitch this afternoon bringing up SQL Server 2008 at Amazon EC2 using one of their packaged instances. I got this error:

```text
Log Name: Application
Source: MSSQLSERVER
Date: 3/17/2010 10:00:14 PM
Event ID: 17058
Task Category: Server
Level: Error
Keywords: Classic
User: N/A
Computer: ip- xxxxxxxx
Description:
initerrlog: Could not open error log file ''. Operating system error = 3(The system cannot find the path specified.).
Event Xml:
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <Provider Name="MSSQLSERVER" />
    <EventID Qualifiers="49152">17058</EventID>
    <Level>2</Level>
    <Task>2</Task>
    <Keywords>0x80000000000000</Keywords>
    <TimeCreated SystemTime="2010-03-17T22:00:14.000Z" />
    <EventRecordID>1583</EventRecordID>
    <Channel>Application</Channel>
    <Computer>ip-xxxxx</Computer>
    <Security />
  </System>
  <EventData>
    <Data></Data>
    <Data>3(The system cannot find the path specified.)</Data>
    <Binary>A2420000100000000C000000490050002D0030004100460030003300390038004300000000000000</Binary>
  </EventData>
</Event>
```

After a little digging – actually a lot of digging – I found this article, which says you can get this error if the SQL Server machine is also a Windows domain controller. I applied both Workaround 1 and Workaround 2 to ALL of the SQLServer* security groups on the domain.

TFS Check-in Policy for Exactly One Work Item

Enforcing work item associations on check-in is vital for ensuring traceability. Here’s a handy TFS policy that forces each check-in to be associated with one and only one work item.

http://blog.accentient.com/2009/12/15/CustomCheckinPolicyForExactlyOneWorkItem.aspx
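TFS check-in policies are normally written as .NET classes, and the linked post covers the real implementation. The rule itself is small, though, so here is a minimal Python sketch of just its logic, assuming the IDs of the work items associated with the pending check-in are available as a list. The function name `evaluate_exactly_one_work_item` is hypothetical and is not part of any TFS API.

```python
from typing import List, Sequence


def evaluate_exactly_one_work_item(work_item_ids: Sequence[int]) -> List[str]:
    """Hypothetical policy rule: return failure messages; an empty list means the check-in may proceed."""
    unique_ids = set(work_item_ids)  # the same work item listed twice counts once
    if not unique_ids:
        return ["Associate this check-in with a work item."]
    if len(unique_ids) > 1:
        ids = ", ".join(str(i) for i in sorted(unique_ids))
        return [f"Associate this check-in with exactly one work item (found {len(unique_ids)}: {ids})."]
    return []


if __name__ == "__main__":
    for ids in ([], [42], [42, 57]):
        print(ids, "->", evaluate_exactly_one_work_item(ids) or "OK")
```

Returning a list of messages rather than a boolean mirrors the usual shape of a policy check: the developer sees why the check-in was rejected, not just that it was.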

Using Subversion With Visual Studio

There’s a nifty tool called VisualSVN, which comes with a separately usable server (free) and a Visual Studio client plug-in ($49). You can get by with just the server, using its included management console plug-in to handle check-in, check-out, branching and merging. The client plug-in delivers nice integration into Visual Studio’s Solution Explorer and a full-service menu to boot. The server includes Subversion 1.6.9.

If you are switching from TFS to SVN, make sure you unbind your solution and its projects from TFS using File –> Source Control –> Change Source Control. If you don’t, you risk confusing results, because the usual TFS sub-menu items like “check-in” and “get latest version” do not map to VisualSVN. VisualSVN’s commands are a separate collection of items toward the bottom of the sub-menu for elements in Solution Explorer. Unbinding removes the TFS items from that sub-menu.
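After unbinding, it can be worth checking that no TFS bindings were left behind in the files themselves. The Python sketch below scans a folder for them, assuming (as is typical for TFS-bound solutions, but verify against your own files) that the solution-level binding lives in a `GlobalSection(TeamFoundationVersionControl)` block in the `.sln` file and that bound projects carry a `<SccProjectName>` element. The function name `find_tfs_bindings` is hypothetical. A project bound to some other source-control provider could also carry `<SccProjectName>`, so treat hits as "look at this file", not proof.

```python
import sys
from pathlib import Path
from typing import List

# Assumed markers of TFS version-control bindings (check against your own files).
SLN_MARKER = "GlobalSection(TeamFoundationVersionControl)"
PROJECT_MARKER = "<SccProjectName>"
PROJECT_SUFFIXES = {".csproj", ".vbproj"}


def find_tfs_bindings(root: Path) -> List[Path]:
    """Return solution/project files under root that still appear to carry source-control bindings."""
    hits: List[Path] = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        suffix = path.suffix.lower()
        if suffix == ".sln":
            marker = SLN_MARKER
        elif suffix in PROJECT_SUFFIXES:
            marker = PROJECT_MARKER
        else:
            continue
        # "utf-8-sig" drops the byte-order mark Visual Studio usually writes at the top of these files.
        text = path.read_text(encoding="utf-8-sig", errors="replace")
        if marker in text:
            hits.append(path)
    return hits


if __name__ == "__main__":
    start = Path(sys.argv[1] if len(sys.argv) > 1 else ".")
    for path in find_tfs_bindings(start):
        print(f"possible leftover binding: {path}")
```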

Myth of Optimization Through Decomposition

This hit me like a freight train when I read it.

In Alan Shalloway's Lean Online Training, we're learning about the Myth of Optimization Through Decomposition, which states that trying to go faster by optimizing each individual piece does not speed up the system.

In the physical world of manufacturing, attempting to run every single machine at 100% utilization results in large piles of unfinished product just sitting around waiting to get through the next step of the pipeline or for a buyer. These unfinished products incur significant costs in terms of inventory and storage. And, whenever the product line is changed or stopped, whatever is sitting in that pipeline winds up being thrown away. This is why physical operations do best when they use a Just In Time strategy -- creating only what they need and no more. It turns out that operating each machine at 100% utilization is actually a really bad business decision.
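The “piles of unfinished product” effect can be made concrete with basic queueing theory. Under the textbook M/M/1 model (one machine, random arrivals, random service times; a simplifying assumption, not something the training material states), the average number of items waiting or in process is ρ/(1 − ρ), where ρ is the machine’s utilization. A minimal Python sketch that tabulates it:

```python
def mm1_items_in_system(utilization: float) -> float:
    """Average number of items queued or in service in an M/M/1 queue: rho / (1 - rho)."""
    if not 0.0 <= utilization < 1.0:
        # At 100% utilization there is no steady state: the queue grows without bound.
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (1.0 - utilization)


if __name__ == "__main__":
    for rho in (0.50, 0.80, 0.90, 0.95, 0.99):
        print(f"{rho:.0%} utilized -> {mm1_items_in_system(rho):5.1f} items in the pipeline on average")
```

Going from 50% to 90% utilization raises the average pile from 1 item to 9, and at 99% it is 99. The last few points of “efficiency” are exactly where the inventory explodes.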

In the world of software development, the parallel to running every machine at 100% utilization is making sure every employee is busy 100% of the time. And, just like in the physical world, this results in large amounts of unfinished work in progress that incurs significant costs and risks. Knowledge degrades quickly, requirements get out of date, and the feedback loop is delayed so we don’t learn what we’re doing wrong. The result is unfinished, untested, misunderstood, and often flat-out unnecessary code bogging down our product, degrading its quality, and actually slowing us down.

- It's difficult implementing a feature that was specified so long ago that no one can remember what it's for.

- It's hard tracking down an error in code developed so long ago that no one remembers how it was implemented.

- It's slow adding new features when the software is muddled with unfinished, untested code (that isn't even needed!).

Thus, Lean teaches us that striving for 100% utilization is not the answer. It doesn't get the product completed any more quickly, and the only thing it creates is waste.

The only way to go faster is to optimize the whole. In other words, find your bottlenecks (the things that slow down the process, incur delays, and add waste) and remove them. When you do, a funny thing happens: your developers can work faster! They're happier, you're happier, and ultimately the customer is happier.

From my own experience I offer some indicators that reveal the truth of this:

It's difficult implementing a feature that was specified so long ago that no one can remember what it's for.

Imagine managing development for 3-4 major products plus shared infrastructure, each with a product backlog ranging from dozens to over one hundred items! Imagine product owners who want to estimate up-front everything they can imagine in a product over N releases, “so we can inform the contents of each release partially based on how big things are.”

In my experience with, say, e-commerce web applications, when user stories are written at any reasonable fidelity it’s unusual to pack more than ten of them into a release. More than that makes the release take too long or requires too many people to get the job done in a reasonable time. By the time you’re done, it’s likely that ten more things have appeared that are at least as important to the business as the next ten in the backlog. In this situation, any backlog longer than ten is waste.

I need to say something about story fidelity. When I see a backlog of dozens or hundreds of items, I usually see very fine-grained stories. In my experience, a story that doesn’t stand on its own when implemented in a product is too fine-grained for a product backlog. A story needs to describe a complete picture, so that when someone unfamiliar with the backlog reads it two months later, they can quickly and easily understand the feature.

Now, I realize I have made a nasty generalization with my ten-item-backlog example. The point is that a backlog of 100 is pretty darn difficult to prioritize and manage. The backlog simply becomes a list of things someone one day thought were needed. Estimating it is waste. Prioritizing it is probably impossible.

Finally, as a development manager you need to resist aggressive product owners who try to pack as much as they can onto your agenda. Their belief is that if every available hour of every resource is planned, then we are working at maximum efficiency. Wrong. Dense-packing software development teams like that guarantees lots of overtime, missed deadlines, or both. If you schedule everyone to the limit you have no “surge capacity”. Without surge capacity you’re dead: you are forced into nights and weekends, and you have grumpy people.

It's hard tracking down an error in code developed so long ago that no one remembers how it was implemented.

I have a rule: whenever you work on old code, refactor it to leave it better than you found it. If you’re a good programmer, can you remember ever working on old code you couldn’t improve? I don’t.

It's slow adding new features when the software is muddled with unfinished, untested code (that isn't even needed!).

I recently helped my company with some technical due diligence evaluating the acquisition of another company and its software. Company B uses a development process in which they release their product religiously every X weeks pretty much regardless of whether new features are completely finished. They have conditioned their user community to expect partially completed or incompletely tested stuff; indeed they say their users enjoy being treated to “sneak preview” features and Company B uses feedback to improve these features before they are completely done. As a business model this works for them and that is wonderful.

I argue that care needs to be taken with this style of development. It is easy to get distracted and start adding new things without finishing old ones, leaving the code littered with partially completed work. Clever branching might help mitigate this problem, but it adds complexity to the development process nevertheless.

How to: Move Your Team Foundation Server from One Hardware Configuration to Another

There are several reasons you may want to move TFS from one platform to another.

- Expansion and growth. Your existing single-server implementation is creaking and sputtering – on old hardware to boot. You’ve got everything on one server.

- You’re upgrading hardware.

- Your system has failed and you need to stand up a new one fast.

- You want to separate a bunch of apps like SharePoint, TFS and SQL Server onto different servers.

The first thing you need to do is read this article about ten times. You need to really understand what it says. I used it to move TFS from a physical server running TFS 2008, SharePoint 2007, Project Server 2007, Team System Web Access and Scrum for Team System to a virtual server last month. If you follow this article you should not have trouble.

The second thing you need to do is reserve a full day to pull this off. Take your time. Check off your progress through each step. Go for a walk every hour or two and collect yourself.

Finally, here’s a tip from my experience. There’s a database on TFS called TfsIntegration. Opening its tbl_service_interface table in SQL Server Management Studio reveals contents that look like this:

On a properly configured system all instances of “mattie” in the above will be the name of your server. If you are using DNS then your domain name should be there.
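If you copy the service names and URLs out of that table, a small script can flag any entry still pointing at the old machine. A minimal Python sketch, assuming you have the rows as (name, url) pairs; that two-column layout, the function name `stale_service_urls`, and the sample rows are hypothetical illustrations, not the actual table schema. If you are using DNS, pass the name you expect to see there as `expected_host`.

```python
from urllib.parse import urlsplit


def stale_service_urls(rows, expected_host):
    """Return the (name, url) rows whose URL host is not expected_host (case-insensitive)."""
    expected = expected_host.lower()
    stale = []
    for name, url in rows:
        host = urlsplit(url).hostname or ""  # urlsplit already lower-cases the hostname
        if host != expected:
            stale.append((name, url))
    return stale


if __name__ == "__main__":
    # Hypothetical rows: "mattie" is an old server name, "newtfs" the new one.
    rows = [
        ("ExampleService1", "http://newtfs:8080/example/service1.asmx"),
        ("ExampleService2", "http://mattie:8080/example/service2.asmx"),
    ]
    for name, url in stale_service_urls(rows, "newtfs"):
        print(f"{name} still points at {url}")
```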

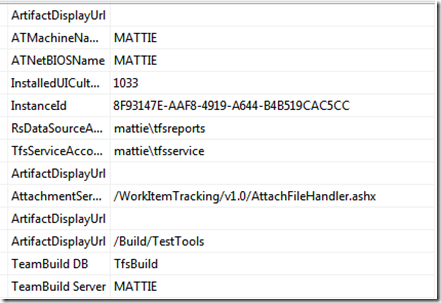

In the same database look inside tbl_Registration_extended_attributes for the following.

Here you want to use the network name of your server, not the domain.

AFTER you complete your migration and test the system, back up the TfsIntegration database on the new server. You’ll want to restore it after you do a fresh restore of the old databases, right before you “go live” on the new system.